Associate Professor Laila Shereen Sakr reimagines artificial intelligence as a community-governed tool grounded in data feminism.

In the current landscape of artificial intelligence, the prevailing metaphor is the "black box" — an opaque system where data is ingested and answers are extracted, often with little visibility into the ethical costs or citational origins of that knowledge.

Laila Shereen Sakr, an associate professor in the Department of Film and Media Studies at UC Santa Barbara, is building a glass house instead.

Building on her 15-year archive platform R-Shief, Sakr is launching Contrapuntal, a new "human-centered" AI platform designed to democratize access to multilingual, culturally diverse datasets. Unlike commercial models that often obscure their sources, Contrapuntal is grounded in data feminism and decolonial theory. It is designed not just to ask for answers but to ask "questions of our questions," bridging humanistic critique with machine learning.

“Rather than extracting from communities, Contrapuntal turns AI development into shared study,” Sakr said. “We are building an undercommons where scholars co-design governance frameworks, datasets and storytelling tools that return knowledge to the people who create it.”

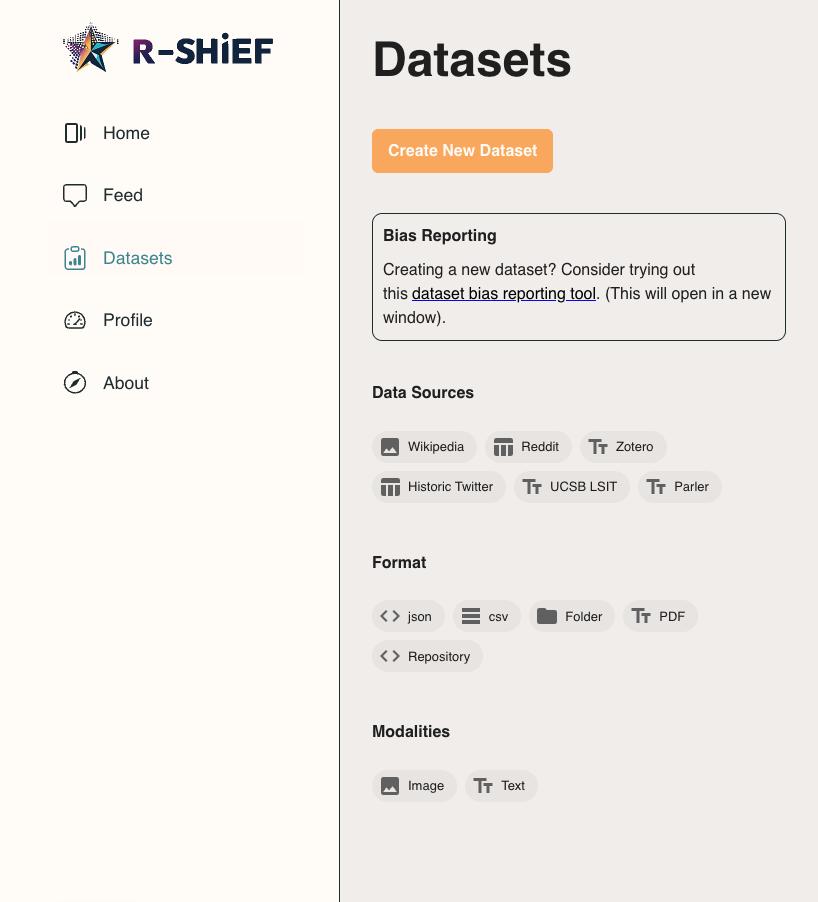

The platform operates on a transparency loop. Users begin by navigating curated datasets — ranging from Bluesky and Reddit to the Internet Archive — or uploading their own public files. From there, the AI assists in creating a "data story," visualizing connections and narratives while maintaining a strict lineage of citation. It is a tool designed to resist the erasure common in algorithmic sorting.

The Contrapuntal dashboard for Datasets emphasizes ethical use, featuring a prominent link to the Bias Reporting tool and detailing all data sources, formats and modalities. (Courtesy of the Contrapuntal Project)

A human architecture

While the output is digital, the engine of Contrapuntal is intensely human. The project is powered by a large collaboration of artists, archivists and students who are not just coding but encoding ethics into the system.

Principal Investigators: Laila Shereen Sakr, Cathy Thomas

Developers & AI Ethics: Calais Waring, Winston Zuo, Josh Bevan, Sierra Peltcher, Cass Mayeda

Student Research Unit: Anna Shahverdyan, Calista Dollaga, Corinna Kelley, Elisa Coccioli, Henry Patrick Coburn, Jiyoo Kim-Jung, Kamaya Jackson, Lexxus Edison, Ripley Baker, Saide Kamille Singh, Sofia Mosqueda

This team serves as the "human data programmers," working to ensure that the platform offers a counter-narrative to the silence of the black box.