By Romy Hildebrand

Commercial algorithms that are used within the criminal justice system to inform court decisions are inaccurate and contain underlying racial bias, UC Berkeley electrical engineering and computer science professor Hany Farid told a UC Santa Barbara audience last week. “But the real issue is that there is a bias in society,” he said.

“It’s a little bit of a chicken and egg problem. I don’t know if the algorithms can directly address this. I think it is a much deeper societal problem.”

Farid joined Sharad Goel, a Stanford engineering professor, for this year’s first public lecture in a series hosted by UCSB’s Mellichamp Initiative in Mind and Machine Intelligence. The initiative is a cross-disciplinary effort to understand the capabilities of the human mind and artificial intelligence (AI). The Zoom event was co-sponsored by The Center for Responsible Machine Learning, The Sage Center for the Study of the Mind, and The Center for Information Technology and Society.

Farid and Goel discussed the accuracy, fairness, and limits of the use of artificial intelligence and machine learning in the sphere of criminal justice.

Hany Farid, UC Berkeley electrical engineering and computer science professor, spoke with UCSB students last week about the use of artificial intelligence in the sphere of criminal justice.

Farid presented research by his former student and mentee Julia Dressel, who analyzed commercial software used in U.S. courts today to predict recidivism (whether a person convicted of a crime will reoffend in the future) and examined its bias against people of color.

The software in question generates a recidivism risk score, a number between 1 and 10, for individuals convicted of a crime. Court judges can use the score to inform pre-trial, trial, and post-trial decisions. The errors in these risk scores work against people of color.

Specifically, the AI was much more likely to falsely predict recidivism for individuals of color, and at the same time was far more likely to fail to predict recidivism for white people. The software more often predicted that people of color would reoffend when in reality they did not, and more often predicted that white people would not reoffend, when in reality they did.
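The two kinds of error described above are commonly called false positives (predicted to reoffend, but did not) and false negatives (predicted not to reoffend, but did). As a rough illustration of how such rates can be compared across groups, here is a minimal Python sketch. The function name, the record layout, and the toy records for groups "A" and "B" are all made up for this example. They are not data from Dressel's study or from the commercial software.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Compute false positive and false negative rates per group.

    records: iterable of (group, predicted_reoffend, actually_reoffended).
    This layout is an assumption made for illustration only.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, predicted, actual in records:
        if actual:
            key = "tp" if predicted else "fn"
        else:
            key = "fp" if predicted else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        did_not_reoffend = c["fp"] + c["tn"]
        did_reoffend = c["fn"] + c["tp"]
        rates[group] = {
            # Share of people who did NOT reoffend but were flagged anyway.
            "false_positive_rate": c["fp"] / did_not_reoffend if did_not_reoffend else None,
            # Share of people who DID reoffend but were not flagged.
            "false_negative_rate": c["fn"] / did_reoffend if did_reoffend else None,
        }
    return rates

# Hypothetical toy records, invented purely to exercise the function.
toy_records = [
    ("A", True, False), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", False, True), ("B", False, False), ("B", True, True), ("B", False, True),
]
rates = error_rates_by_group(toy_records)
for group, r in sorted(rates.items()):
    print(group, r)
```

In this toy set, group A has the higher false positive rate and group B the higher false negative rate, which is the shape of the disparity described in the talk. Overall accuracy alone would not reveal it.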

Dressel’s research first compared the accuracy of the algorithm to the accuracy of human decision making. There were two key findings. First, humans were just as accurate as the algorithm. Second, humans were also just as biased, even though race was not among the information given to study participants.

“That really puzzled us,” Farid said. “How is it that the commercial software and random internet users basically have the same accuracy? How is it that participants are not race-blind when they don't know the race?”

With further research, Farid and his student were able to answer these questions.

They concluded that although neither the algorithm nor study participants were given information on an individual’s race, the information provided on prior crimes acted as a proxy for race. “In this country, because of social and economic and judicial disparities, if you are a person of color, you are significantly more likely to be arrested, charged, and convicted,” Farid said. “So, if you are looking at prior crimes as a big indicator of recidivism, race is creeping into your data through a proxy of number of crimes.”

This is also why the commercial software and random study participants yielded almost the same accuracy rate, only about 65%. “The prediction is simple,” Farid explained. “If all you are doing, either implicitly or explicitly, is looking at the rate at which you are committing crimes, it is a pretty simple predictor. You can get to 65% [accuracy] pretty easily.”
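To make the idea of a "pretty simple predictor" concrete, here is a hedged Python sketch of a one-feature rule that flags someone as likely to reoffend once their count of prior offenses reaches a cutoff. The cutoff of 3, the function names, and the toy cases are assumptions chosen for illustration. The talk did not specify how the commercial software or the study participants actually weighed prior offenses.

```python
def predict_reoffend(prior_count, threshold=3):
    """Flag a person as likely to reoffend if priors meet the cutoff.

    The threshold value is hypothetical, not taken from any real tool.
    """
    return prior_count >= threshold

def accuracy(cases, threshold=3):
    """Fraction of (prior_count, actually_reoffended) cases predicted correctly."""
    if not cases:
        raise ValueError("cases must not be empty")
    correct = sum(
        predict_reoffend(priors, threshold) == actual for priors, actual in cases
    )
    return correct / len(cases)

# Made-up (prior_count, actually_reoffended) pairs for demonstration only.
toy_cases = [(0, False), (1, False), (2, True), (4, True), (5, False), (7, True)]
acc = accuracy(toy_cases)
print(f"accuracy on toy cases: {acc:.2f}")
```

Because the rule depends only on prior offenses, any disparity in who gets arrested, charged, and convicted passes straight through into its predictions, which is the proxy effect Farid described.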

Even though the accuracy of AI is comparable to that of human decision making, this does not make it an adequate replacement, he said. “The problem with that approach is the sense of authoritative voice. Judges would take the AI more seriously than random people,” Farid noted. “These commercial algorithms, despite hiding behind proprietary software, are in effect extremely simple, and that simplicity reveals where the inaccuracy and the bias comes from.”

Farid expressed concern about whether AI technology can be improved to a level of accuracy that would warrant its use in situations where people’s civil liberties are at stake. But Farid and co-panelist Sharad Goel said these algorithms can still be applied in other ways to positive effect.

Sharad Goel is the founder and director of Stanford’s Computational Policy Lab, which develops technology to tackle pressing issues in criminal justice, education, voting rights, and more.

Goel argued that algorithms in the criminal justice system are too often applied toward punitive measures rather than as supportive tools to improve outcomes.

He is the founder and director of Stanford’s Computational Policy Lab, a group that develops technology to tackle pressing issues in criminal justice, education, voting rights, and beyond.

Currently, Goel is working with the Santa Clara Public Defender’s Office to develop a platform that delivers personalized reminders to clients with upcoming court dates and offers free door-to-door rideshare transportation to court. “When allocating limited transportation resources, it is particularly important to identify those individuals who are likely to benefit the most,” Goel said. “This prediction problem is particularly difficult for humans, but plays to the strengths of statistical models.”

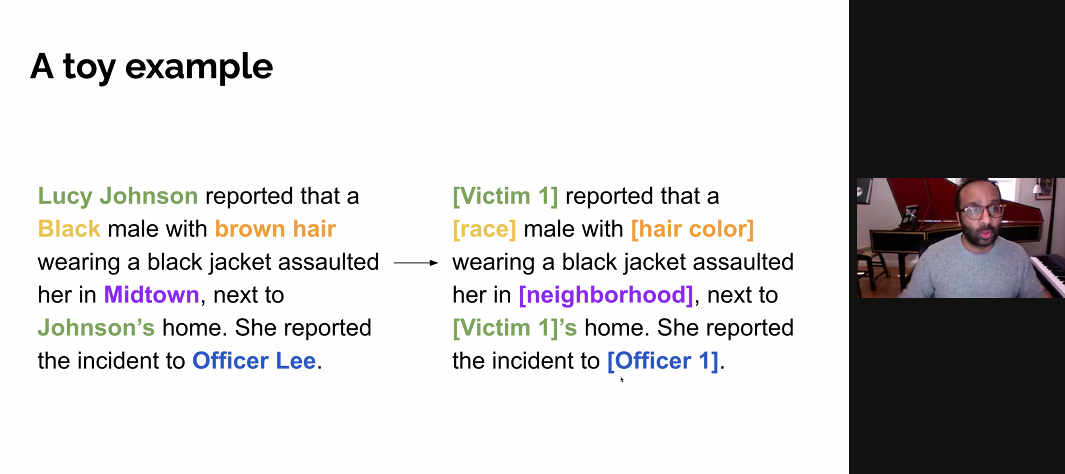

Stanford’s Sharad Goel showed a UCSB audience how his natural language processing tool can mask race-related indicators in police reports.

In another project, his team developed a natural language processing tool that contributes to race-blind judicial decision making by masking race-related indicators in police reports. The platform has been used by the San Francisco District Attorney’s Office to review incoming felony arrests when deciding whether or not to file charges.
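As a very rough sketch of what masking race-related indicators can look like, the Python snippet below replaces a short list of explicit race descriptors with a placeholder. This is a plain keyword substitution written for illustration, not the natural language processing tool Goel's team built. The term list, placeholder, and function name are assumptions, and a real system would need to handle far subtler cues, as well as words such as "white" that can describe a car rather than a person.

```python
import re

# Hypothetical, deliberately short list of explicit descriptors.
RACE_TERMS = ["asian", "black", "hispanic", "latino", "white"]

# Match any listed term as a whole word, ignoring case.
PATTERN = re.compile(r"\b(" + "|".join(RACE_TERMS) + r")\b", re.IGNORECASE)

def mask_race_terms(text, placeholder="[REDACTED]"):
    """Replace each listed descriptor in the text with the placeholder."""
    return PATTERN.sub(placeholder, text)

masked = mask_race_terms("Officer stopped a White male near the park.")
print(masked)  # Officer stopped a [REDACTED] male near the park.
```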

Simple machine learning methods that have been around for some time can be used to help reduce incarceration, Goel explained. “I would like those algorithms, with proper transparency and oversight, to be deployed when appropriate,” he said, urging more positive future applications of AI in the criminal justice system.

Romy Hildebrand is a third-year Communication major at UC Santa Barbara. She is a Web and Social Media Intern for the Division of Humanities and Fine Arts.